Incident 142: Facebook’s Advertisement Moderation System Routinely Misidentified Adaptive Fashion Products as Medical Equipment and Blocked Their Sellers

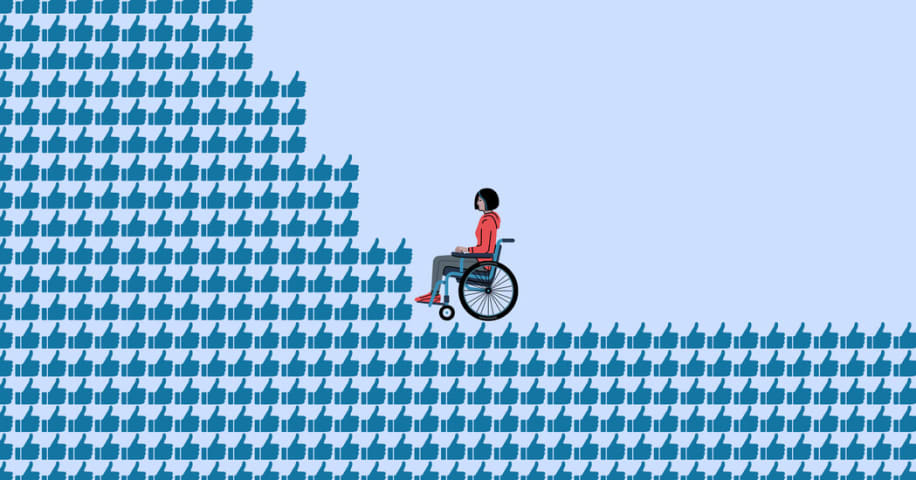

Description: The automated ad moderation system used across Facebook's platforms falsely classified adaptive fashion products as medical and health care products and services, so the retailers selling them regularly had their ads banned and had to file appeals.

Entities

Alleged: Facebook and Instagram developed and deployed an AI system, which harmed Facebook users with disabilities and adaptive fashion retailers.

Incident Stats

Incident ID

142

Report Count

1

Incident Date

2021-02-11

Editors

Sean McGregor, Khoa Lam

Incident Reports

Reports Timeline

nytimes.com · 2021


Earlier this year, Mighty Well, an adaptive clothing company that makes fashionable gear for people with disabilities, did something many newish brands do: it tried to place an ad for one of its most popular products on Facebook.

The product…

Variants

A "variant" is an incident that shares the same causative factors, produces similar harms, and involves the same intelligent systems as a known AI incident. Rather than index variants as entirely separate incidents, we list variations of incidents under the first similar incident submitted to the database. Unlike other submission types to the incident database, variants are not required to have reporting in evidence external to the Incident Database. Learn more from the research paper.